Tableau Seeks to Empower Customers with Enterprise Platform Strategy

Tableau held its annual user conference TC17 in Las Vegas, celebrating the 10th anniversary of the event with 14,000 attendees and plenty of energy to keep everyone buzzing for days. Several notable announcements and themes from the event speak to Tableau’s strategy, focus and maturity. While the keynotes maintained a “Data leads to Truth” and myth-busting theme and annual attendee-favorite sessions “Devs on Stage” and “Iron Viz” were fun and better than ever, my hat is off to Tableau for their very dignified and respectful recognition of the tragedy that struck Las Vegas just one week earlier.

Celebrating his one-year anniversary with the company, CEO Adam Selipsky opened his keynote with company stats and appreciation for all of the fellow data people in attendance. Tableau now touts 61,000 customer accounts (up 15,000 in the last year) in 160 countries, and Tableau Public has reached one million visualizations and one billion views. Tableau continues to gain traction with fully supported Tableau Server deployments in AWS, Azure and Google Cloud (with one-third of server trials on the cloud), and subscription-based Tableau Online now has more than 7,500 customers.

Tableau is focused on becoming a mission-critical enterprise platform, evolving from the desktop emphasis of its past. The company recognizes the demands of an enterprise-class platform and the importance of partnering with IT to tackle security, governance, compliance and enterprise-grade support. Becoming an enterprise standard also requires a mindset shift, and the changes include subscription licensing, hybrid cloud and platform strategies, and even a new sales framework that invests more in the partner ecosystem.

The enterprise focus presents potential challenges for Tableau. Enterprises tend to value environment stability through longer integrated test cycles and fewer upgrades per year, which sits uneasily with Tableau's 90-day release cycles and lack of support for releases beyond 30 months. In May 2018, many global companies will need to comply with the EU's General Data Protection Regulation (GDPR), yet Tableau claims GDPR is not among its customers' top 10 priorities and therefore encourages companies to rely on processes and Tableau's existing security features for their compliance efforts.

Overall, 130 new features were added over the last year across categories of connectivity, data prep, analytics, enterprise, cloud, web and mobile. Four product development initiatives stood out among all the announcements at the conference: Extensions API, Hyper, Smart Analytics and Project Maestro.

Extensions API – the journey to becoming an enterprise platform

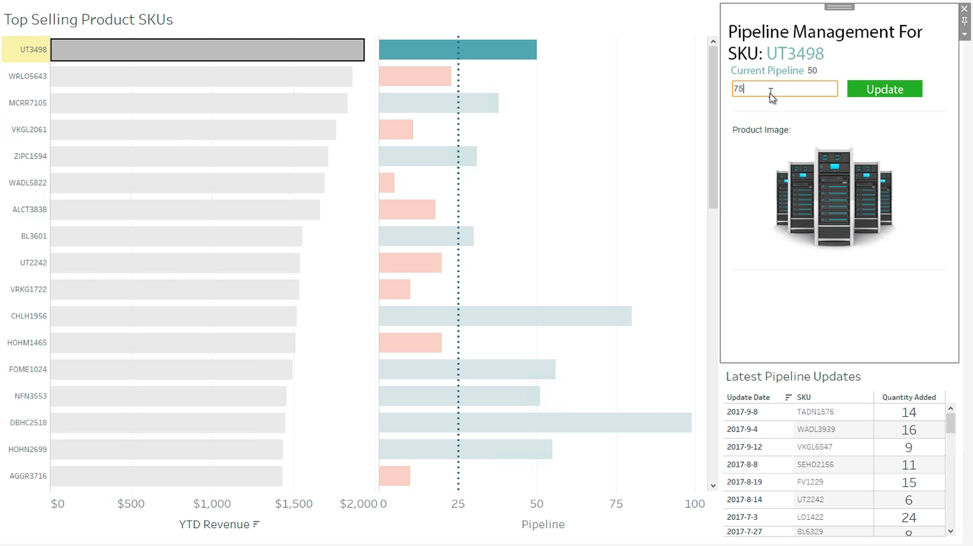

The biggest (and understated) news, in my opinion, is the Extensions API shipping in early 2018. Tableau has been evolving its integration capabilities over several releases with JavaScript, REST and Document APIs, but the Extensions API is a foundation that allows third-party developers to integrate their products right into the Tableau interface for a seamless user experience. Demo examples included displaying valuable information from the Alation Data Catalog right in Tableau, an inventory dashboard that can "write back" to a data source when a user needs to correct data, and a dashboard that provides a natural language "narrative summary" from Automated Insights' natural language generation tool. These examples show how Tableau users are empowered by better context, additional insights and the ability to take action, and they signal that new third-party integrations will inevitably follow. This will have a significant positive impact on Tableau's integration with mission-critical enterprise applications and should become part of a company's application and analytics integration strategy.

Hyper – the new champ

The big splash was the availability of the Tableau 10.5 beta, with the new Hyper database replacing the existing Tableau Extract Engine known as TDE. This wasn't news for many who followed the HyPer acquisition announcement at last year's conference, but there were still impressive demos of how the in-memory Hyper database outperformed TDE in several challenger-versus-champ style "boxing rounds" of benchmark use cases for large-scale data source extracts, data exports and dashboard refreshes. Upgrading to 10.5 will be transparent to the user: TDE files are converted into Hyper files as they're used, a process that can be scheduled if desired because it takes a little longer the first time it runs. While many people were quick to download and try the new version, Tableau recommends keeping the 10.5 beta in a company's sandbox environment until its general availability release. Tableau shared that Hyper has been the engine behind Tableau Public for nearly a year now, which provided substantial testing before this beta release of Tableau Server.

Hyper's compelling capabilities fuel the debate over whether it exacerbates data silo and proliferation problems at companies running Tableau Server, with extracts essentially becoming independent data marts. Fortunately, multiple Tableau execs consistently said that they are not pushing a data architecture agenda but rather ensuring a good user experience for customers who are constrained by the performance of their underlying data sources. For companies that don't want to extract data and whose existing data sources already perform well, Tableau will connect directly to those sources and use Hyper only where needed.

Smart Analytics – an eye on the future

Through its Smart Analytics initiative, Tableau intends to enable more people to work with data more easily and effectively than they can today. This vision includes research and development in four key areas – Recommendations, Model Automation, Natural Language Interaction and Automated Discovery – with some of these features available in the current release. With the recent acquisition of ClearGraph, Tableau's work on the future of analytics and its research in natural language processing (showcased last year as Project Eviza) have come into sharper focus, including the many facets of search and semantic models in different modalities, not just in the data but across the Tableau platform itself. The Recommendations Engine looks to be broad, drawing on much of the platform's usage data and leveraging user behavior (and similar-user behavior), item popularity, source and author, and other contextual signals. Automated Discovery will help users ask new, deeper contextual questions that they hadn't thought to ask.

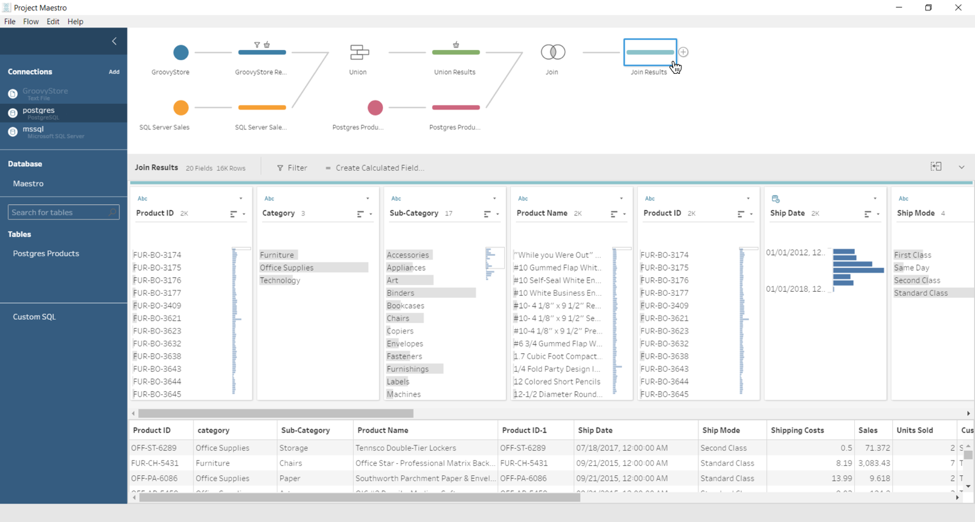

Data Prep and Project Maestro – still cooking in Alpha

Also announced at last year's Tableau conference was an initiative called "Project Maestro," Tableau's spin on data prep for the optimal user experience. Now in Alpha, it showed several cool techniques, but overall the data prep tool lacked the robustness and maturity of existing data prep market players – which is to be expected for an Alpha release. A beta release is likely "very soon," according to Tableau execs, but it's still unclear whether the tool will be integrated into the visualization tool or Tableau Server or offered as a standalone product, and how it will be licensed. However, Tableau did say that the data prep tool would have to be compelling enough to customers as a standalone product no matter how it was licensed.

Many of these announcements and themes did not come as a big surprise, given the vision that Tableau shared at last year's TC16 conference. However, kudos to the developers in "Devs on Stage" for showcasing advances and how Tableau delivers many features that customers request, including nested sorting in a chart, density charts (heat maps), server features and the much-anticipated Tool Tips. Many of the features either close gaps in existing capabilities or sit behind the scenes in Tableau Server's enterprise and cloud capabilities (which end users may not cheer for, but IT and administrators do).

Tableau is admittedly in the early stages of its enterprise journey, with the challenges of data governance, compliance and IT management still ahead, and its strategy is to leverage customer partnerships to help navigate them. I look forward to TC18 in New Orleans next year to hear about the progress of the coming year, and maybe a few surprises would be nice.

Sessions from the conference are available for viewing at http://tclive.tableau.com/.

Author

John O’Brien is Principal Advisor and CEO of Radiant Advisors. A recognized thought leader in data strategy and analytics, John’s unique perspective comes from the combination of his roles as a practitioner, consultant and vendor CTO.